The Rise of Machine(s)

“The AI revolution in 2023 brought transformative changes to industries and daily life. Intelligent automation and advanced algorithms improved efficiency in manufacturing, healthcare, finance, and transportation. Natural language processing has led to the emergence of sophisticated voice assistants. Breakthroughs in image recognition and sentiment analysis revolutionised fields like autonomous vehicles, cybersecurity, and personalised marketing.”

Well, I didn’t write any of it, ChatGPT did.

So today we are going to explore if we can take advantage of machine learning to help build better embedded systems.

This article is a continuation of a previous blog post in which, I hacked a synaptics touchpad.

We will use the touchpad as a base to get input for our neural network model.

Machine learning is all about building and training models, which are essentially neural networks, to make predictions about what some input to the model might be.

This information (the prediction) can then be used to do various cool things.

“Infrerence running on an $8 ARM Cortex-M7 MCU on a 28x28 image using LSTM model in under 7ms with more than 96% accuracy.”

The process of running ML models on a MCU can be divided into three parts:

- Recording data from various sensors to be used as the input to our network.

- Modelling and training the network with the recorded data.

- Deploying and running inference on the trained model using new real world data.

Although some ML frameworks allow you to train the model on the MCU, most sane people will use a much powerful host computer or a web ML platform (Google Colab) to make and train the NN model.

How you run the inference of the trained model on the MCU depends on which ML framework you have chosen to use.

So, How is it done?

For making and training the model, TensorFlow is used almost exclusively.

I have used Keras, which gives us an easy to use python interface for accessing the TensorFlow library.

You can also use Google Colab Platform to make and train the model without having to deal with installing keras and tensorflow locally on your machine.

For running inference of the trained model, you have many options depending on which MCU you use.

There are quite a few ML frameworks available for MCUs, some manufacturers provide their owm ML frameworks for running models.

Some popular ones are:

- TensorFlow Lite for Microcontrollers. (TFLM)

- AIfES

- DeepViewRT (NXP)

- STM32Cube.AI (STM)

I have used both TFLM and AIfES, both are quite capable but TFLM has more features and support for more types of Ops but requires c++ support and is complex,

AIfES on the other hand is quite easy to use and is written in C, but lacks features.

Also, you can directly import tflite models into TFLM, whereas AIfES requires you to extract weights and biases from your trained model and import them separately which is quite tedious.

Step 1: Collect the data.

The synaptics touchpad is the sensor that we will use to collect data for our ML demo.

The touchpad outputs data over PS/2 which is captured by our MCU.

The touchpad reports us with the absolute position of our finger when we touch it, we treat every position reported to us as a pixel coordinate in an image whose size is given by the resolution of our touchpad.

Whenever a touch is reported we just fill the pixel coordinate with a value of 1 in our image array.

This specific model of touchpad has a resolution of near about 5600 x 4600 which is too big for even an average modern single GPU to run real time inference on let alone our MCU, so we need to scale down this resolution to something our MCU can handle.

For digit recognition, there is a well known dataset of small handwritten digits called MNIST dataset, we will use it as a starting point as it will provide us will a good large pre-tested dataset to train our network on.

The resolution of images in the MNIST dataset is 28x28, perfectly within the bounds of compute of our MCU, so we will scale down our touchpads 5600x4600 image to a 28x28 image.

Here are some of the raw samples that i recorded from the touchpad:

As you can see, the raw points captured by our touchpad when downsampled to a 28x28 pose a bit of a problem for us.

- Firstly, sometimes you can see skipped pixels, these errors can be attributed to the downsampling process and also because the sample rate of our touchpad is not very high, and if we very quickly swipe our finger across the touchpad, we loose some samples.

- Secondly, these raw sample images have too little data in terms of pixel variations, the very narrow strokes of the touchpad samples are good for getting positioning data but do not resemble an actual pen stroke (something our MNIST dataset is based on.)

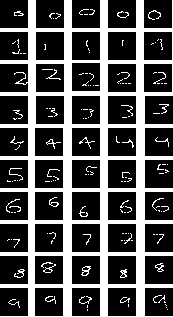

Here is an example of what MNIST dataset contains:

You can see they do not match at all, If we train our NN on MNIST and then validate the NN on our raw samples, we will get very poor accuracy.

Thus we need to do a bit of pre-processing with our raw samples to make them a bit more like MNIST samples.

In we were to do this the right way, we would be collecting our own samples and making a large dataset for them as that would give us the best accuracy but that is a very time consuming task and thus we are cheating a bit here by using both MNIST which has over 60000 samples combined with some of our own raw samples.

Here is our pre-processed version of touchpad data samples, this looks good enough for a demo.

Not perfect, but would do the job. This pre-processing is done in real-time on MCU by applying a 3x3 averaging kernel.

Now we collect and save a lot of these pre-processed images, our MCU simply sends them over USART as a C array and we have a program on host computer that writes this data to a CSV file which can then be used to train our neural network model.

Step 2: Make and Train the model.

I use google colab to test and train models.

Here is the python script for the final version of the model which uses LSTM.

import tensorflow as tf

from tensorflow import keras

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

print("using tf version: ", tf.__version__)

dataset = pd.read_csv("drive/MyDrive/data/data_fat.csv")

x_train_custom = dataset.copy()

y_train_custom = np.array(x_train_custom.pop('0'))

x_train_custom = np.array(x_train_custom)

x_train_custom = np.array(x_train_custom,dtype=np.float32)

(x_train, y_train), (x_test, y_test) = keras.datasets.mnist.load_data()

x_train = x_train.flatten()

x_train = x_train.reshape(60000,784)

x_train = x_train/255

x_train = np.array(x_train,dtype=np.float32)

x_train = np.append(x_train,x_train_custom)

y_train = np.append(y_train,y_train_custom)

x_train = x_train.reshape(60542,784)

dataset = pd.read_csv("drive/MyDrive/data/data_fat.csv")

x_test = dataset.copy()

y_test = np.array(x_test.pop('0'))

x_test = np.array(x_test)

x_test = np.array(x_test,dtype=np.float32)

model = tf.keras.Sequential()

model.add(tf.keras.layers.Input(784,batch_size=1,dtype=tf.float32))

model.add(tf.keras.layers.Reshape((28,28)))

model.add(tf.keras.layers.LSTM(20,return_sequences=True))

# model.add(tf.keras.layers.Conv2D(filters=8,kernel_size=3,padding='same',activation='relu'))

# model.add(tf.keras.layers.MaxPool2D(pool_size=(2,2),strides=(2,2),padding='same'))

model.add(tf.keras.layers.Flatten())

model.add(tf.keras.layers.Dense(128))

model.add(tf.keras.layers.LeakyReLU(alpha=0.01))

model.add(tf.keras.layers.Dense(10))

model.add(tf.keras.layers.Activation('softmax'))

loss_fn = keras.losses.SparseCategoricalCrossentropy()

model.compile(optimizer='adam',loss=loss_fn,metrics=["accuracy"])

model.fit(x_train,y_train,epochs=5,shuffle=True,validation_data=(x_test,y_test))

model.save("drive/MyDrive/data/tp.model")

def representative_dataset():

for data in x_train[:100]:

yield [data.reshape(1, 28, 28)]

converter = tf.lite.TFLiteConverter.from_keras_model(model)

converter.optimizations = [tf.lite.Optimize.DEFAULT]

converter.representative_dataset = representative_dataset

converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]

converter.inference_input_type = tf.int8

converter.inference_output_type = tf.int8

tflite_model = converter.convert()

# Save the model.

with open('drive/MyDrive/data/model.tflite', 'wb') as f:

f.write(tflite_model)

Few things to note here,

We use google drive to host our custom dataset in a csv file, which we then read from and combine the custom data with the one from MNIST dataset.

I tried 3 different models each with different pros and cons.

First a simple FFN with 2-3 Dense layers, which was very fast to run inference on(5ms) and had small size but most of the time had overfitting issues which led to poor real-world accuracy.

Then 1-2 Conv2D layers model was used instead of simple Dense layer, which offered high accuracy among real world input but was very slow to infer(0.5-3 seconds) and was huge in size (2-4MB).

Finally a single LSTM layer was used which gave us high enough accuracy(96%) and was quicker to run inference on (~150ms) and had moderate size(400kB);

All of the above performance figures were on non optimized models running on FP32 data.

Huge performance and memory gains were seen when converting the model to run on INT8 instead of FP32.

This is because TFLM can utilise the CMSIS-NN library which uses the native fixed point integer SIMD instructions(DSP extentions) present in Arm Cortex M7 and M4, which can help accelerate a lot of operations.

LSTM models inference time decreased to 7ms from 150ms, and size became ~80k from ~330kb.

Accuracy remained almost the same as humanly perceived,

The output of this training process gave us our model.tflite file which was then converted to a C array using xxd tool.

xxd -i model.tflite > model.h

This header file was included into our MCU project.

Step 3: Run inference on the trained model.

A condensed version of the code that runs on the MCU is shown below.

#include "model.h"

const int tensor_arena_size = 32768 * 2;

uint8_t tensor_arena[tensor_arena_size];

.

.

.

//Initialise TFLM

tflite::MicroErrorReporter micro_error_reporter;

tflite::ErrorReporter *error_reporter = µ_error_reporter;

const tflite::Model *model = tflite::GetModel(model_tflite);

tflite::AllOpsResolver resolver;

tflite::MicroInterpreter interpreter(model,resolver,tensor_arena,tensor_arena_size,error_reporter);

interpreter.AllocateTensors();

.

.

.

//somewhere in the code where PS/2 data is captured and pre-processed and stored in a array of floats

.

.

.

//Runs in a loop..

TfLiteTensor *input = interpreter.input(0);

//load our pre-processed sample data into the tensor

for(uint32_t i = 0; i < 784; i++)

input->data.int8[i] = (*((float*)img + i) * 255) - 128;

//run the inference

interpreter.Invoke();

TfLiteTensor *output = interpreter.output(0);

int8_t vmax = -128;

uint32_t max_index = 0;

//find the max in the output tensor, its index is our prediction..

for(uint32_t i = 0; i < 10; i++)

{

if(output->data.int8[i] > vmax)

{

vmax = output->data.int8[i];

max_index = i;

}

}

//clear frame

for(uint32_t x = 0; x < 28*28; x++)

{

*((float*)img + x) = 0;

}

//do whatever you want with the prediction..maybe show it on a screen?

In the actual demo whose video is shown above, all the inference is run on a separate FreeRTOS task whereas the UI is rendered on a separate task.

But why run Neural Networks on MCUs?

You don’t need to.

Machine learning is just another tool in an engineers toolbox. Some problems are better suited for it, some aren’t.

When we are talking about a resource constraint environment such as a Microcontroller, we have to choose wisely where to effectively use the power of ML.

The compute power provided by even the most powerful MCUs on the market today is not enough to run complex but optimized image classification networks with more than 3-6 FPS, But if you choose your battles wisely, you can get away with quite good results.

Some tasks can simply use DSP and other classification techniques to make predictions which can be faster than running ML inference.

ML shines when we need to combine data from multiple sensors to make a common predictions, which can be difficult to do in traditional way.

Anyways.. MCU manufacturers are aware of the lack of compute power in current MCUs and are investing in IPs to help accelerate AI workloads on the edge.

ARM has launched Cortex-M85, its latest core which includes Helium SIMD support which will further improve performance, coupled with the Ethos-u65 NPU we might just have enough power in our hands.

Manufacturers such as NXP and STM are also coming our with their own proprietary NPU IPs in general MCU.

Lets see what the future holds…