When was the last time you heard GPUs and MCUs mentioned in the same sentence?

Well, today we are going to talk about how the embedded landscape is changing with the arrival of ever more powerful microcontrollers, some of which even feature dedicated GPUs to accelerate graphics processing!

Many modern applications require low-power solutions with rich graphical interfaces. The smartphone revolution has made people accustomed to rich UIs, so they now expect the smart devices around them to have fluid, smartphone-like UIs too.

Advanced silicon process nodes, such as 28 nm FD-SOI, make it possible to produce devices that consume very little power yet still reach high clock speeds.

As a result, manufacturers are moving towards ever more powerful microcontrollers, blurring the line between MCUs and what used to be low-end application processors.

Tasks that once required an ARM Cortex-A7 system with a GPU running Linux can now be handled by an ARM Cortex-M7 with a GPU running an RTOS.

But why does an MCU need a GPU?

Traditionally, all user interfaces on microcontrollers are composed using software rendering.

This means the CPU does all the rendering, which becomes difficult even for a powerful MCU core as the resolution increases.

Add to this the complex graphical operations of rotation, scaling, alpha blending, etc., and it becomes difficult to even extract a few FPS.
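To put rough numbers on this, here is a back-of-the-envelope sketch in plain C. It assumes a full-screen redraw every frame on the 480 x 800 panel used later in this article and a hypothetical 1 GHz core clock; real costs also depend on memory stalls, the blending math itself, and the display pipeline.

```c
#include <assert.h>
#include <stdint.h>

/* Pixels the CPU must produce per second for full-screen redraws. */
uint64_t pixels_per_second(uint32_t width, uint32_t height, uint32_t fps)
{
    return (uint64_t)width * height * fps;
}

/* CPU cycles available per pixel if the core did nothing but render.
 * cpu_hz is an assumed clock frequency, not a measured value. */
double cycles_per_pixel(uint64_t cpu_hz, uint32_t width, uint32_t height, uint32_t fps)
{
    assert(width > 0 && height > 0 && fps > 0);
    return (double)cpu_hz / (double)pixels_per_second(width, height, fps);
}
```

With these assumptions, `pixels_per_second(480, 800, 60)` is 23,040,000, which leaves only about 43 cycles per pixel at 1 GHz, before any blending, rotation, or the real-time work the MCU is actually there to do.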

Because so much time is spent rendering the UI, the real-time tasks the system was actually designed to handle suffer.

Thus, switching from software rendering to GPU-based rendering frees up system resources.

AFAIK, currently only 3 microcontroller vendors offer GPU support in MCUs.

- Microchip PIC32MZ DA

- NXP i.MX RT (VGLite)

- ST STM32U5 (NeoChrom)

Out of these, the PIC’s GPU is the most basic, offering only raster operations.

NXP’s and ST’s solutions are almost identical, offering both vector and raster operations.

This article will focus on NXP’s solution, VGLite, which is built on licensed VeriSilicon GPU IP: the GCNanoUltraV, also known as GC355.

This is a 2.5D GPU offering a whole suite of accelerated 2D graphics operations on both vector and raster graphics.

The GPU earns its 2.5D label because it can render vector and raster graphics rotated about any axis, with perspective-correct transformations. This lets us achieve semi-3D effects using only 2D graphics.

So let’s have a look at what NXP’s VGLite has to offer us.

The Setup

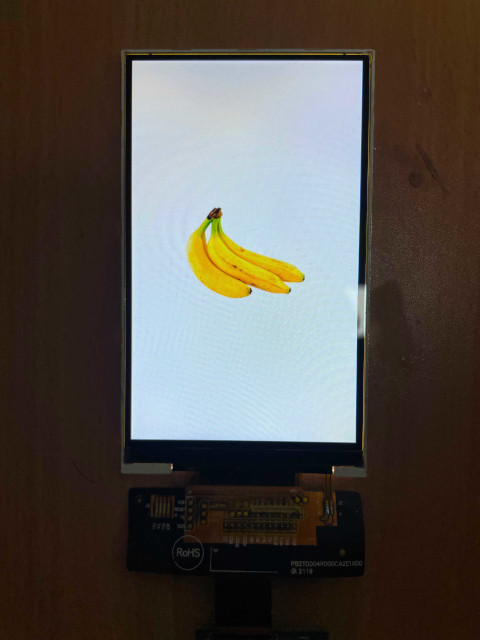

Our setup today consists of a MIMXRT1166 devkit hooked up to an LCD via an FFC, with a custom PCB in between to adapt the two different LCD connectors and handle backlight control.

The LCD is a 4.0 inch IPS LCD with a resolution of 480 x 800 pixels.

The LCD is driven over MIPI-DSI.

blit blit blit…

One of the most common graphical operations is drawing an image on the screen. In the graphics world, there is no screen, only a frame buffer.

A computer cannot directly render a JPEG or a PNG; it first needs to convert (decompress) it into a format that it understands.

A bitmap is one of the most basic formats that an image can be converted to.

In essence, a bitmap is just an array of RGB values stored as 8-bit unsigned integers.

The size of the array is image width × image height × 3 bytes (3 bytes per pixel, since we are using RGB).

This format is known as RGB888: for each pixel, the red byte is stored first, then green, then blue, and each occupies 8 bits.
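A minimal sketch of such a bitmap in plain C. The `bitmap_rgb888` type and its helpers are hypothetical names for illustration, not VGLite types: the buffer holds width × height × 3 bytes with tightly packed rows, and pixel (x, y) starts at byte offset (y × width + x) × 3.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical RGB888 bitmap: tightly packed rows, 3 bytes per pixel. */
typedef struct {
    uint32_t width;
    uint32_t height;
    uint8_t *data; /* width * height * 3 bytes, row-major */
} bitmap_rgb888;

size_t rgb888_size(uint32_t width, uint32_t height)
{
    return (size_t)width * height * 3u;
}

/* Allocate a zeroed (black) bitmap; returns 0 on success, -1 on failure. */
int bitmap_rgb888_init(bitmap_rgb888 *bmp, uint32_t width, uint32_t height)
{
    bmp->width = width;
    bmp->height = height;
    bmp->data = calloc(rgb888_size(width, height), 1);
    return bmp->data ? 0 : -1;
}

/* Write one pixel: red first, then green, then blue. */
void bitmap_rgb888_set(bitmap_rgb888 *bmp, uint32_t x, uint32_t y,
                       uint8_t r, uint8_t g, uint8_t b)
{
    assert(x < bmp->width && y < bmp->height);
    size_t offset = ((size_t)y * bmp->width + x) * 3u;
    bmp->data[offset + 0] = r;
    bmp->data[offset + 1] = g;
    bmp->data[offset + 2] = b;
}
```

For the 480 x 800 panel in this article, `rgb888_size(480, 800)` comes to 1,152,000 bytes for a single full-screen RGB888 frame.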

There are lots of other formats; some common ones are RGBX8888, ARGB8888 (A stores the alpha value used for transparency), RGB565, RGBA4444, YUYV, etc.
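The formats differ mainly in bytes per pixel and bit layout. As a sketch (plain C, with a hypothetical `pixel_format` enum rather than VGLite's own format definitions), here is a bytes-per-pixel lookup and the usual way an RGB888 colour is packed into RGB565, by keeping the top 5, 6 and 5 bits of red, green and blue:

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical format list for illustration only. */
typedef enum { FMT_RGB888, FMT_RGBX8888, FMT_ARGB8888, FMT_RGB565, FMT_RGBA4444 } pixel_format;

unsigned bytes_per_pixel(pixel_format fmt)
{
    switch (fmt) {
    case FMT_RGB888:   return 3;
    case FMT_RGBX8888: /* X byte is unused padding */
    case FMT_ARGB8888: return 4;
    case FMT_RGB565:
    case FMT_RGBA4444: return 2;
    }
    assert(0 && "unknown pixel format");
    return 0;
}

/* Pack 8-bit R, G, B into a 16-bit RGB565 value (red in the top 5 bits). */
uint16_t rgb888_to_rgb565(uint8_t r, uint8_t g, uint8_t b)
{
    return (uint16_t)(((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3));
}
```

Green gets the extra bit because the eye is most sensitive to it. Halving the bytes per pixel (RGB565 versus ARGB8888) also halves the memory and bandwidth each frame needs, which matters a lot on an MCU.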

A blit is an operation that renders a source bitmap onto a destination bitmap, with some kind of compositing/blending operation in between.
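To make the compositing step concrete, here is a CPU-side sketch of a blit using the classic "source over" blend, where each destination channel becomes src × α + dst × (1 − α), with α scaled to 0..255. It assumes straight (non-premultiplied) ARGB8888 source pixels packed as 0xAARRGGBB words and an RGB888 destination; the function names are hypothetical. This is the per-pixel work a GPU blit offloads, not VGLite's implementation.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Blend one 8-bit channel: (src*a + dst*(255-a)) / 255, rounded. */
static uint8_t blend_channel(uint8_t src, uint8_t dst, uint8_t a)
{
    unsigned v = (unsigned)src * a + (unsigned)dst * (255u - a);
    return (uint8_t)((v + 127u) / 255u);
}

/* Hypothetical helper: blit an ARGB8888 source (0xAARRGGBB words) onto an
 * RGB888 destination at (dx, dy). The source must fit inside the destination. */
void blit_src_over(const uint32_t *src, uint32_t sw, uint32_t sh,
                   uint8_t *dst, uint32_t dw, uint32_t dh,
                   uint32_t dx, uint32_t dy)
{
    assert(dx + sw <= dw && dy + sh <= dh);
    for (uint32_t y = 0; y < sh; ++y) {
        for (uint32_t x = 0; x < sw; ++x) {
            uint32_t p = src[(size_t)y * sw + x];
            uint8_t a = (uint8_t)(p >> 24);
            uint8_t *d = &dst[((size_t)(dy + y) * dw + (dx + x)) * 3u];
            d[0] = blend_channel((uint8_t)(p >> 16), d[0], a); /* red   */
            d[1] = blend_channel((uint8_t)(p >> 8),  d[1], a); /* green */
            d[2] = blend_channel((uint8_t)p,         d[2], a); /* blue  */
        }
    }
}
```

A half-transparent red pixel (0x80FF0000) blitted onto white leaves red at 255 and pulls green and blue down to 127, which is exactly the "alpha blended onto a white background" look. Doing this for every pixel of a 256x256 image is what the GPU takes off the CPU's hands.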

Let’s use VGLite to create a 256x256 bitmap in RGBA8888 format and draw it on the screen.

As you can see, the image is rendered and alpha blended perfectly onto the white background.

One odd thing is that the grid-like pixelation artefacts you see in the image above are not visible in real life; the display itself is super sharp. I guess this is some optical effect of capturing a display with an image sensor? (EDIT: it’s called the moiré effect)

Remember Matrix?

No, not this one, damn you! The one in math!

Matrix math enables us to easily apply geometric transformations to objects in 2D and 3D space.

These can be rotation, scaling, translation, projective transformation, etc.

VGlite has the ability to transform raster and vector objects via matrix transformations.

Let’s first centre the image on the screen; this uses the matrix translation operation.
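In homogeneous 2D coordinates, a translation is a 3x3 matrix with the offsets in its last column. Here is a plain-C sketch that centres a 256x256 image on the 480 x 800 panel; the `mat3` type and helper names are hypothetical and are not VGLite's own matrix API.

```c
#include <assert.h>

/* Hypothetical row-major 3x3 matrix acting on column vectors (x, y, 1). */
typedef struct { float m[3][3]; } mat3;

mat3 mat3_translation(float tx, float ty)
{
    mat3 t = {{{1, 0, tx}, {0, 1, ty}, {0, 0, 1}}};
    return t;
}

/* Apply an affine matrix to the point (x, y). */
void mat3_apply(const mat3 *t, float x, float y, float *ox, float *oy)
{
    /* Affine only: the bottom row must be (0, 0, 1), so no divide is needed. */
    assert(t->m[2][0] == 0.0f && t->m[2][1] == 0.0f && t->m[2][2] == 1.0f);
    *ox = t->m[0][0] * x + t->m[0][1] * y + t->m[0][2];
    *oy = t->m[1][0] * x + t->m[1][1] * y + t->m[1][2];
}

/* Translation that places an (iw x ih) image in the middle of an (sw x sh) screen. */
mat3 centre_on_screen(float iw, float ih, float sw, float sh)
{
    return mat3_translation((sw - iw) / 2.0f, (sh - ih) / 2.0f);
}
```

For a 256x256 image on a 480 x 800 screen the offset is (112, 272), so the image's top-left corner lands at (112, 272) and its bottom-right corner at (368, 528).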

Now let’s try to rotate the image about the Z axis, which is the most common 2D rotation.
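Rotating "in place" means rotating about the image's centre rather than the origin: translate the centre to the origin, rotate, then translate back. A plain-C sketch of composing those three matrices (hypothetical `mat3` helpers, not VGLite's API). To keep it free of libm it takes the cosine and sine of the angle directly; a caller would normally get them from `cosf()`/`sinf()`.

```c
#include <assert.h>

/* Hypothetical row-major 3x3 matrix acting on column vectors (x, y, 1). */
typedef struct { float m[3][3]; } mat3;

/* r = a * b, i.e. apply b first, then a. */
mat3 mat3_mul(const mat3 *a, const mat3 *b)
{
    mat3 r;
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j) {
            r.m[i][j] = 0.0f;
            for (int k = 0; k < 3; ++k)
                r.m[i][j] += a->m[i][k] * b->m[k][j];
        }
    return r;
}

/* Rotation about (cx, cy); c = cos(angle), s = sin(angle).
 * Positive angles turn +x towards +y (clockwise on a y-down screen). */
mat3 rotation_about(float cx, float cy, float c, float s)
{
    assert(c * c + s * s > 0.99f && c * c + s * s < 1.01f); /* pure rotation */
    mat3 to_origin = {{{1, 0, -cx}, {0, 1, -cy}, {0, 0, 1}}};
    mat3 rot       = {{{c, -s, 0}, {s, c, 0}, {0, 0, 1}}};
    mat3 back      = {{{1, 0, cx}, {0, 1, cy}, {0, 0, 1}}};
    mat3 tmp = mat3_mul(&rot, &to_origin);
    return mat3_mul(&back, &tmp);
}
```

For a 90° turn of a 256x256 image about its centre (128, 128), this produces the matrix [0 −1 256; 1 0 0; 0 0 1], so the image stays inside its original square. Precomputing the combined matrix once means each pixel only needs a single matrix multiply.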

We can also scale the image; let’s give that a shot.
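Scaling works the same way. Multiplying out translate × scale × translate gives a single matrix that scales about a chosen point. A plain-C sketch with hypothetical helper names:

```c
#include <assert.h>

/* Hypothetical row-major 3x3 matrix acting on column vectors (x, y, 1). */
typedef struct { float m[3][3]; } mat3;

/* Scale by (sx, sy) about (cx, cy): T(c) * S * T(-c) multiplied out, so
 * x' = sx * (x - cx) + cx and y' = sy * (y - cy) + cy. */
mat3 scale_about(float cx, float cy, float sx, float sy)
{
    assert(sx != 0.0f && sy != 0.0f); /* a zero scale would collapse the image */
    mat3 s = {{{sx, 0, cx - sx * cx},
               {0, sy, cy - sy * cy},
               {0, 0, 1}}};
    return s;
}

/* Apply an affine matrix to the point (x, y). */
void mat3_apply(const mat3 *t, float x, float y, float *ox, float *oy)
{
    *ox = t->m[0][0] * x + t->m[0][1] * y + t->m[0][2];
    *oy = t->m[1][0] * x + t->m[1][1] * y + t->m[1][2];
}
```

Scaling 2x about the screen centre (240, 400) leaves the centre where it is and pushes a point 10 pixels to its right out to 20 pixels.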

And finally, let’s look at non-affine projective transformation, the real 2.5D feature of this GPU.

This allows us to mimic image rotation about any axis, making it look and feel like 3D.
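What makes a transform projective is a non-zero bottom row: each point gets its own W, and the result is divided by it. A plain-C sketch of applying such a matrix (hypothetical helper, not VGLite's API):

```c
#include <assert.h>

/* Hypothetical row-major 3x3 matrix acting on column vectors (x, y, 1). */
typedef struct { float m[3][3]; } mat3;

/* Apply a full (possibly non-affine) matrix: compute (X, Y, W), then divide by W.
 * A bottom row of (g, h, 1) with g or h non-zero makes W vary across the image,
 * which is what produces the perspective foreshortening. */
void mat3_apply_projective(const mat3 *t, float x, float y, float *ox, float *oy)
{
    float X = t->m[0][0] * x + t->m[0][1] * y + t->m[0][2];
    float Y = t->m[1][0] * x + t->m[1][1] * y + t->m[1][2];
    float W = t->m[2][0] * x + t->m[2][1] * y + t->m[2][2];
    assert(W != 0.0f); /* W == 0 would map the point to infinity */
    *ox = X / W;
    *oy = Y / W;
}
```

With a bottom row of (1/1024, 0, 1), points on the left edge are unchanged, while at x = 1024 W reaches 2 and that edge is squeezed to half its height. A rectangle therefore becomes a trapezoid, which reads as the image being tilted away about a vertical axis.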

Now that’s cool, isn’t it?

“But I want more!” you say.

Don’t worry, VGLite also has the ability to render vector graphics.

With vector graphics, you create a path for the GPU to draw by giving it a set of commands such as:

- move_to(x,y)

- line(x,y)

- quad_curve(cx,cy,x,y)

- cubic_curve(c1x,c1y,c2x,c2y,x,y)

- arc(rot,x,y)

- end

- etc…
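A path is therefore just a sequence of commands plus their coordinates. The encoding below is a hypothetical one made up for illustration; VGLite defines its own opcode values and data layout, which this does not reproduce. It builds a square from move/line/end commands and shows how a point on a quadratic curve segment is evaluated: B(t) = (1 − t)²·P0 + 2(1 − t)t·P1 + t²·P2.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical path opcodes, for illustration only. */
typedef enum { OP_END = 0, OP_MOVE, OP_LINE, OP_QUAD } path_op;

typedef struct {
    unsigned char ops[16];  /* one opcode per command */
    float coords[64];       /* coordinates consumed by the commands, in order */
    size_t n_ops, n_coords;
} path;

static void emit(path *p, path_op op, const float *xy, size_t count)
{
    assert(p->n_ops < sizeof p->ops);
    assert(p->n_coords + count <= sizeof p->coords / sizeof p->coords[0]);
    p->ops[p->n_ops++] = (unsigned char)op;
    for (size_t i = 0; i < count; ++i)
        p->coords[p->n_coords++] = xy[i];
}

void move_to(path *p, float x, float y) { float v[2] = {x, y}; emit(p, OP_MOVE, v, 2); }
void line_to(path *p, float x, float y) { float v[2] = {x, y}; emit(p, OP_LINE, v, 2); }
/* Quadratic curve: control point (cx, cy), end point (x, y). */
void quad_to(path *p, float cx, float cy, float x, float y)
{
    float v[4] = {cx, cy, x, y};
    emit(p, OP_QUAD, v, 4);
}
void end_path(path *p) { emit(p, OP_END, NULL, 0); }

/* One coordinate of a quadratic Bezier from p0 to p2 with control p1, t in [0, 1]. */
float quad_point(float p0, float p1, float p2, float t)
{
    float u = 1.0f - t;
    return u * u * p0 + 2.0f * u * t * p1 + t * t * p2;
}
```

A 100x100 square is then one `move_to`, four `line_to` calls and an `end_path`: six opcodes and ten coordinates, independent of how large it ends up on screen.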

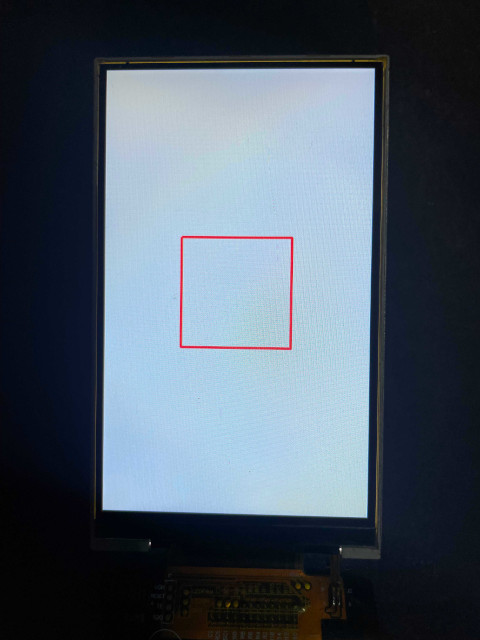

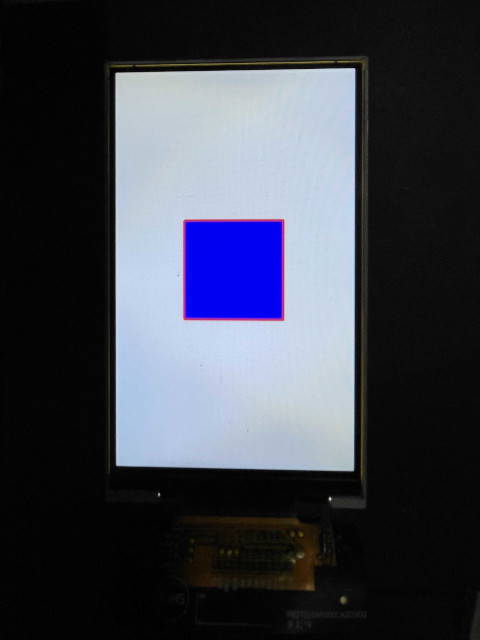

Combining these commands, you can create any shape you want. You can then tell the GPU whether to stroke the path or fill it.

The stroke operation draws only the outline of the path that you described, while a fill also paints the area enclosed by the path with a solid colour, a gradient, or an image texture.

Here is an example of a square rendered with the stroke operation, using red as the stroke colour and a stroke width of 5.

Here is an example of the same path, but this time rendered with the fill operation, in blue.
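Conceptually, a fill asks "is this pixel inside the path?" and a stroke asks "is this pixel within half the stroke width of the outline?". The plain-C sketch below answers both questions for a closed polygon. It uses the even-odd rule for the fill (non-zero winding is the other common fill rule) and ignores stroke joins, caps and anti-aliasing. It only illustrates what the two operations mean, not how the GPU rasterises paths internally.

```c
#include <assert.h>
#include <stddef.h>

typedef struct { float x, y; } vec2;

/* Fill test, even-odd rule: cast a ray towards +x and count edge crossings. */
int inside_fill(const vec2 *poly, size_t n, float px, float py)
{
    assert(n >= 3);
    int inside = 0;
    for (size_t i = 0, j = n - 1; i < n; j = i++) {
        vec2 a = poly[i], b = poly[j];
        if ((a.y > py) != (b.y > py)) {
            float x_cross = a.x + (py - a.y) * (b.x - a.x) / (b.y - a.y);
            if (px < x_cross)
                inside = !inside;
        }
    }
    return inside;
}

/* Squared distance from (px, py) to the segment ab. */
static float dist2_to_segment(vec2 a, vec2 b, float px, float py)
{
    float dx = b.x - a.x, dy = b.y - a.y;
    float len2 = dx * dx + dy * dy;
    float t = len2 > 0.0f ? ((px - a.x) * dx + (py - a.y) * dy) / len2 : 0.0f;
    if (t < 0.0f) t = 0.0f;
    if (t > 1.0f) t = 1.0f;
    float ex = a.x + t * dx - px, ey = a.y + t * dy - py;
    return ex * ex + ey * ey;
}

/* Stroke test: within width/2 of any edge of the closed outline. */
int on_stroke(const vec2 *poly, size_t n, float width, float px, float py)
{
    assert(n >= 2);
    float half = width / 2.0f;
    for (size_t i = 0, j = n - 1; i < n; j = i++)
        if (dist2_to_segment(poly[j], poly[i], px, py) <= half * half)
            return 1;
    return 0;
}
```

For a 100x100 square, the centre pixel passes the fill test but not the stroke test, while a pixel one unit below the top edge passes the stroke test for a width of 5. Drawing both is simply the two tests combined.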

You can even combine the two operations.

And of course, you can apply matrix transformations to these vector paths as well.

You can also convert SVGs to the data format that the VGLite path structure expects and render them too, although NXP has not released the tool to do this yet.
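Mathematically, transforming a vector path only touches its coordinates; the command sequence stays the same. A sketch assuming a hypothetical flat array of (x, y) pairs and a 2x3 affine matrix in the same (a, b, c, d, e, f) convention SVG uses for `matrix()`:

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical affine transform: x' = a*x + c*y + e, y' = b*x + d*y + f. */
typedef struct { float a, b, c, d, e, f; } affine2d;

/* Transform n_points (x, y) pairs in place; the path's opcodes are untouched. */
void transform_points(const affine2d *t, float *xy, size_t n_points)
{
    assert(xy != NULL || n_points == 0);
    for (size_t i = 0; i < n_points; ++i) {
        float x = xy[2 * i], y = xy[2 * i + 1];
        xy[2 * i]     = t->a * x + t->c * y + t->e;
        xy[2 * i + 1] = t->b * x + t->d * y + t->f;
    }
}
```

Because only the coordinates change and the shape is rasterised afterwards, a scaled-up path stays sharp at any size, unlike a scaled-up bitmap.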

Here is the standard SVG tiger in blue.

That’s all good, but what about text, eh?

Text is normally a big issue with any embedded GUI framework based on traditional software rendering.

Text is usually drawn by exporting the glyphs of a specific font (TrueType, OpenType, etc.) for a particular font size to a bitmap array.

Individual characters from this bitmap array are then drawn at runtime based on the supplied text string.

This has multiple issues:

- Each font needs its own bitmap array.

- Even in the same font, different font sizes require different bitmap arrays.

- Arbitrary rotation of fonts for portrait/landscape conversion is not possible or too slow.

- Advanced effects such as gradients on font colour are not possible at runtime.

- In some cases, font colour cannot be changed at runtime.

- A huge amount of memory is therefore required to store multiple fonts.

Because VGLite supports the rendering of vector graphics, it solves these issues:
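A rough way to see the memory cost: an anti-aliased bitmap font stored at 8 bits per pixel needs about glyph count × glyph width × glyph height bytes per size. The sketch below assumes, purely for illustration, 95 printable ASCII glyphs, each in a square cell as wide as the pixel size. Real fonts use per-glyph bounding boxes, so treat the result as an upper bound.

```c
#include <assert.h>
#include <stddef.h>

/* Bytes for one 8-bit-per-pixel bitmap font at one pixel size,
 * assuming every glyph occupies a px_size x px_size cell (an upper bound). */
size_t bitmap_font_bytes(size_t glyph_count, size_t px_size)
{
    return glyph_count * px_size * px_size;
}

/* Total for one typeface exported at several sizes. */
size_t bitmap_font_family_bytes(size_t glyph_count, const size_t *sizes, size_t n_sizes)
{
    assert(sizes != NULL || n_sizes == 0);
    size_t total = 0;
    for (size_t i = 0; i < n_sizes; ++i)
        total += bitmap_font_bytes(glyph_count, sizes[i]);
    return total;
}
```

Under these assumptions, one typeface at 16, 24 and 32 pixels already takes 176,320 bytes (about 172 KiB), and every additional typeface, weight or size adds more.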

- Only a single special vector font array is required.

- Font size can be changed at runtime.

- Arbitrary rotation can be applied at runtime.

- Advanced effects can also be applied at runtime with a bit of performance loss.

- Font colour can be changed at runtime.

- In some cases, the memory required can be less.

Here is an example of a text string drawn using VGLite with varying size and rotation effects applied.
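With a vector font, size and rotation are just another transform applied to the glyph outline. TrueType and OpenType outlines are defined in font units on an em square (commonly 1000 or 2048 units per em), with y pointing up. Here is a sketch of mapping one outline point to screen pixels, assuming a y-down frame buffer, a baseline origin (ox, oy), and a caller-supplied cosine and sine of the rotation angle. The function name is hypothetical.

```c
#include <assert.h>

typedef struct { float x, y; } point;

/* Map a glyph outline point from font units to screen pixels.
 * px_size: desired size in pixels per em; c, s: cos/sin of the rotation angle;
 * (ox, oy): where the glyph origin lands on screen. */
point glyph_to_screen(point p, float units_per_em, float px_size,
                      float c, float s, float ox, float oy)
{
    assert(units_per_em > 0.0f);
    float k = px_size / units_per_em; /* font units -> pixels */
    float x = p.x * k;
    float y = -p.y * k;               /* font y points up, screen y points down */
    point out = { c * x - s * y + ox, s * x + c * y + oy };
    return out;
}
```

The same outline data serves every size and angle; only `px_size`, `c` and `s` change at runtime. This is why a single vector font array can replace a stack of per-size bitmap tables.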

I must tell you, though, that converting a TrueType font to the format VGLite expects is not simple. It requires a special tool that converts the font to an intermediate format, which then needs to be stored as a C array and loaded into VGLite.

The issue is that this tool has not been officially released yet, although a beta version is available on NXP’s forum.

So after all this, you know the basics of what VGLite can do for you, although it can do a lot more that I have not covered here.

This gets us to our final conclusion for the day.

- GPUs in microcontrollers enable us to use advanced graphics features to make rich, fluid UIs.

- GPUs offload graphics rendering and free up the CPU for other stuff.

- GPUs in MCUs are not magic; proper optimisation is still needed for a 60 FPS experience.

- Memory management is still a priority; graphics require heavy memory use to store all the assets.

- Memory bandwidth affects performance; assets in internal SRAM or external SDRAM perform better than assets in flash.

- Memory coherence needs to be taken into account.

- Overall performance is limited by memory bandwidth and system architecture; the limited bandwidth of SDR SDRAM and the internal system bus architecture are the main bottlenecks in MCUs.

- In NXP’s case, there are multiple graphics peripherals, each with its own strengths; for optimal results, combining them lets you split the workload and boost performance.
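Some back-of-the-envelope numbers behind the 60 FPS and bandwidth points for the 480 x 800 panel used here. This plain-C sketch counts only a single full-frame write per refresh. Real traffic is higher, because the display controller also reads the frame buffer out on every refresh and the GPU has to fetch the source assets.

```c
#include <assert.h>
#include <stdint.h>

/* Time available to render one frame, in microseconds. */
double frame_budget_us(uint32_t fps)
{
    assert(fps > 0);
    return 1e6 / fps;
}

/* Bytes per second needed to write every pixel of every frame exactly once. */
uint64_t full_redraw_bytes_per_s(uint32_t width, uint32_t height,
                                 uint32_t bytes_per_pixel, uint32_t fps)
{
    return (uint64_t)width * height * bytes_per_pixel * fps;
}
```

At ARGB8888 that is 92,160,000 bytes per second just to write the frame once, halved to 46,080,000 with RGB565, all within a budget of roughly 16.7 ms per frame. Every extra layer, blend or asset fetch from flash comes on top of that.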

That’s it for today, folks!